Autonomous System Safety by Phil Koopman – Blame-Free Robotaxi Crashes Are Still Crashes

See full article by Phil Koopman at Autonomous System Safety

Which robotaxi crashes should “count” for robotaxi safety metrics? Some say that all crashes should count. Some say that only at-fault crashes should count. And some discussions about robotaxi safety say things like “no fatal crashes” when they should be saying “no at-fault fatal crashes” — which are two quite different things.

The arguments for and against using blame as a filter for which crashes count are tricky. So let’s try to sort that out. For example, when some robotaxi company claims that their vehicles harm fewer people than human-driven cars, should that be based on all crashes or just at-fault crashes?

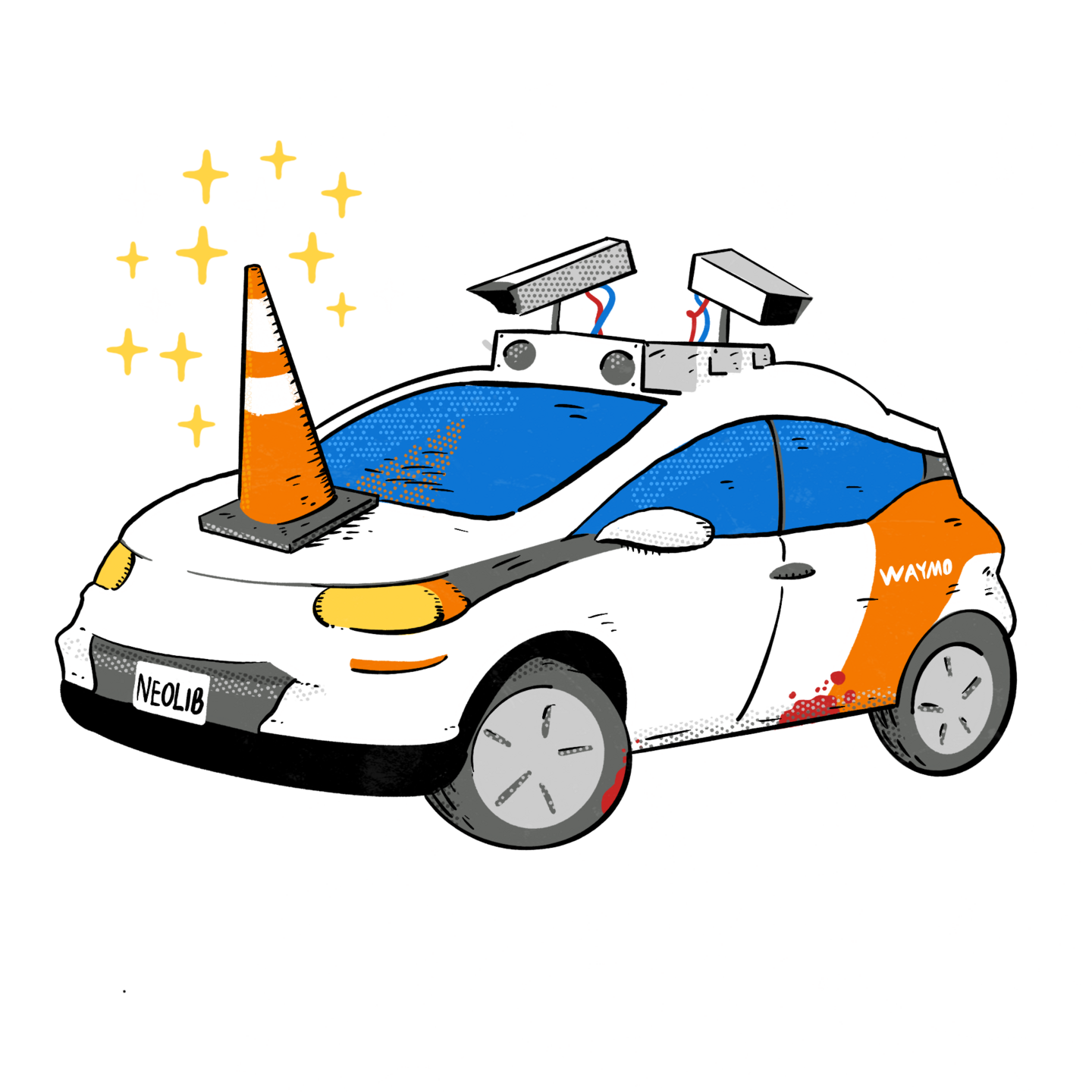

Some robotaxi industry supporters, especially some vocal ones advocating for Waymo, want only at-fault crashes to count. Others (including me) have held that this metric is too easily misleading as the only metric. I’ll try to summarize the arguments and propose a metric approach based on my recent work on redefining safety principles for embodied AI safety.

… (see full article)

If you are an advocate of only-blame counting, you are implicitly bolstering the argument that an all-robotaxi fleet will mean no road deaths because they will never be at fault in a crash. And certainly this is a message being sent by the industry whether they say they are intentionally doing so or not. But in a world in which some crashes have no clear blame, avoiding blame is not avoiding crashes.

It might seem intuitive that if nobody is to blame there will be fewer (or perhaps no) crashes, but that will not automatically be the case. For the next decade and more, there will be a healthy mix of human drivers. Behavioral incompatibilities in a mixed human/robotaxi fleet might (or might not) increase crash rates depending on behaviors of different brands of robotaxis. And even if, decades from now, all vehicles are robotaxis, there might be emergent effects that cause fewer at-fault crashes without reducing total harm as machine learning gets clever at avoiding blame. One hopes avoiding blame will help, but it is not a guaranteed outcome.
